Machine learning problems can be broadly divided into two categories: supervised learning and unsupervised learning problems. In supervised learning problems, both the input data and the ground truth are available. The algorithms are trained using the ground truth and then evaluated on unseen data. In unsupervised learning problems, the ground truth is not known, and records are grouped into clusters based on the similarities between their features.

Supervised learning algorithms are further divided into two categories: classification and regression algorithms. Classification algorithms predict the category to which an instance belongs, for instance whether a message is spam or ham, whether a banknote is real or fake, or whether a tweet is positive or negative. Regression algorithms, on the other hand, predict a continuous value, e.g. the expected price of a house, the number of votes that a party is likely to get in a general election, or the marks a student is expected to score.
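To make the distinction concrete, here is a minimal scikit-learn sketch on hypothetical toy data. The single feature and both target arrays are invented for illustration. A classifier returns one of the category labels it saw during training, while a regressor returns a continuous number.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

# Hypothetical toy data: one numeric feature per instance
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]])

# Classification: the targets are discrete categories
y_class = np.array(["ham", "ham", "ham", "spam", "spam", "spam"])
classifier = LogisticRegression().fit(X, y_class)
label = classifier.predict([[5.5]])[0]  # one of the labels seen in training

# Regression: the targets are continuous values (here exactly 50 * x + 50)
y_value = np.array([100.0, 150.0, 200.0, 250.0, 300.0, 350.0])
regressor = LinearRegression().fit(X, y_value)
value = regressor.predict([[5.5]])[0]  # a real number, close to 325

print(label, value)
```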

In previous posts, we explained how classification algorithms can be used for tasks such as sentiment analysis, banknote authentication and customer churn prediction. In this article, we will see how regression algorithms are used to predict a continuous value.

The Problem Definition

The problem we are going to solve in this article is house price prediction. Based on certain features of a house, we have to predict its estimated price. These features include its area in square feet, its condition, the number of bedrooms, bathrooms and floors, and the year it was built.

Where to Find the Data?

The dataset for this problem, along with all of its statistical details, is freely available at this Kaggle link. It contains price records of houses in King County, USA. The details of the dataset are also available at the given link, such as the minimum and maximum values of each column and a histogram for each column. To make things simpler, download the data into a local directory.

Importing Libraries and Dataset

As always, the first step in developing a machine learning model is to import the required libraries along with the dataset. The following script imports the required libraries:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

And the following script imports the dataset:

house_data = pd.read_csv('D:housing_dataset.csv')

To see the number of rows and columns in the dataset, we can use the “shape” attribute as shown below:

house_data.shape

In the output you should see (21613, 21), which means that our dataset has 21613 rows and 21 columns.

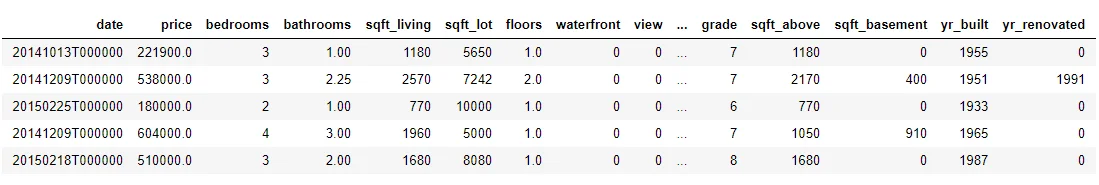

Let's see how the dataset actually looks. To do so, we can use the “head()” function as shown below:

house_data.head()Exploratory Data Analysis

The task is to predict price, depending upon all the other variables. The price, in this case, is our dependent variable while all the other features are dependent variables. Let's try to see if there is any relationship between price and some of the other features in our dataset.

Before drawing any plots, execute the following script to increase the plot size.

import matplotlib.pyplot as plt

fig_size = plt.rcParams[“figure.figsize”]

fig_size[0] = 10

fig_size[1] = 8

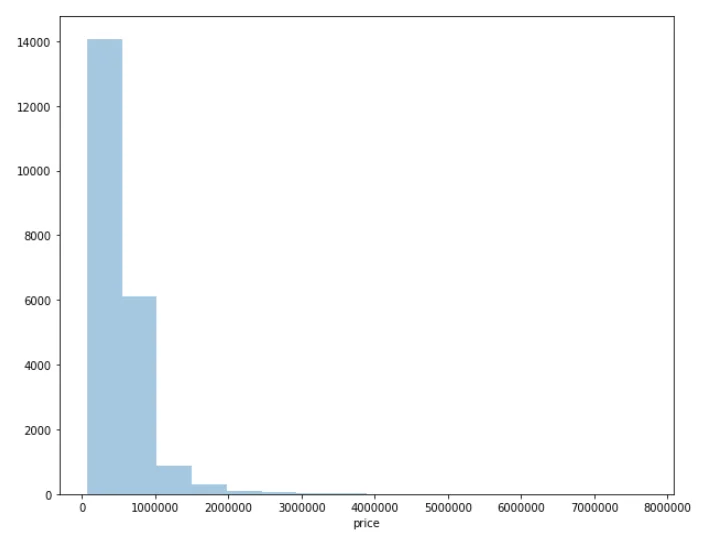

plt.rcParams[“figure.figsize”] = fig_sizeLet's first see the price distribution. Execute the following script:

sns.histplot(house_data['price'], bins=8)  # replaces the deprecated sns.distplot(..., kde=False)

The output looks like this:

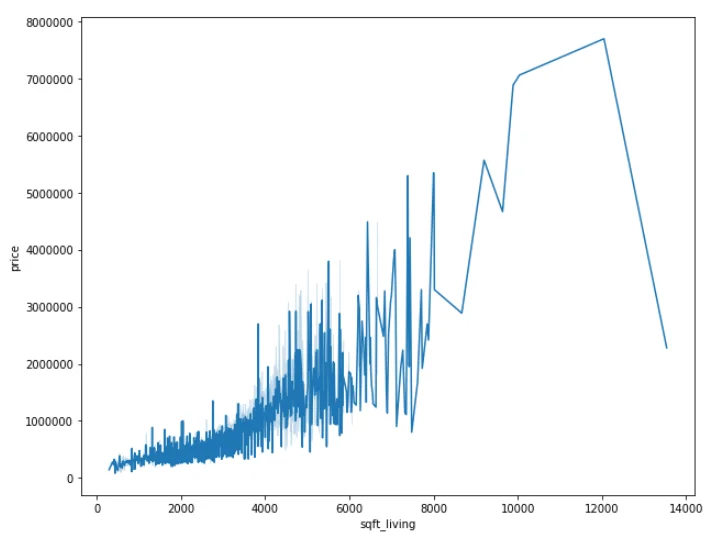

You can see that most of the houses are priced between 0 and 1 million. Next, let's see if there is any relationship between the area of the house in square feet and the price. We will use the “lineplot” function from the Seaborn library to view this relationship, as shown below:

sns.lineplot(x="sqft_living", y="price", data=house_data)

The output looks like this:

From the output, it can be seen that there is a positive correlation between the area of the house and the price. However, for very large areas, the price starts to decrease. One possible reason is that very large houses have few buyers, since they are expensive to maintain.

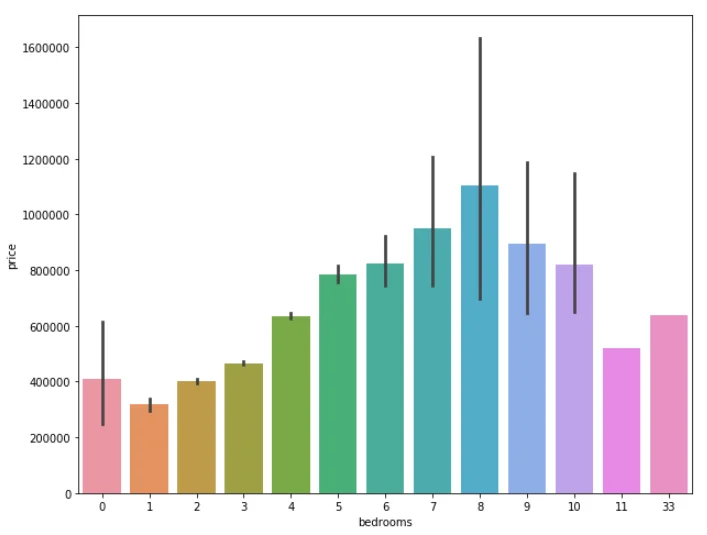
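The visual impression of a positive correlation can be quantified with the Pearson correlation coefficient, which pandas computes with `Series.corr()`. On the real data the equivalent call would be `house_data['sqft_living'].corr(house_data['price'])`. The minimal sketch below uses a small hypothetical sample of areas and prices rather than the actual dataset. Note also that Seaborn's lineplot averages the prices of all houses sharing the same x value and draws a confidence band around that mean. As a result, points at very large areas, where houses are rare, can rest on very few observations.

```python
import pandas as pd

# Hypothetical sample (not taken from the King County data)
sample = pd.DataFrame({
    "sqft_living": [1000, 1500, 2000, 2500, 3000],
    "price": [200000, 290000, 410000, 500000, 610000],
})

# Pearson correlation: +1 means a perfect positive linear relationship
r = sample["sqft_living"].corr(sample["price"])
print(round(r, 3))
```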

Next, let's find the relationship between the number of bedrooms and the price. Since the bedrooms column has only a few unique values, we can use a bar plot to draw this relationship. Execute the following script:

sns.barplot(x='bedrooms', y='price', data=house_data)

Again, the price of the house increases with the number of bedrooms but decreases when there are too many bedrooms. The reason may be the same: houses with many bedrooms are big and not so easy to maintain.

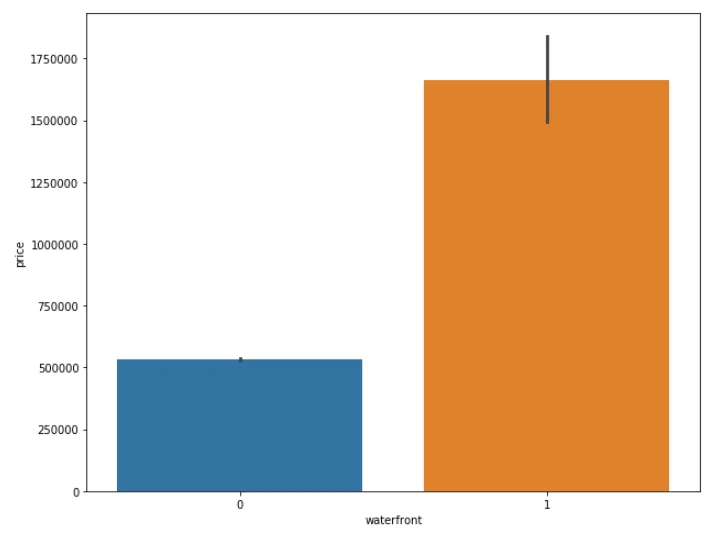
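By default, Seaborn's barplot draws the mean of the y values for each x category, along with an error bar. The bar heights therefore correspond to a pandas group-by mean, which on the real data would be `house_data.groupby('bedrooms')['price'].mean()`. A minimal sketch on hypothetical values:

```python
import pandas as pd

# Hypothetical sample of houses (not real King County records)
sample = pd.DataFrame({
    "bedrooms": [2, 2, 3, 3, 3, 4],
    "price": [300000, 340000, 400000, 420000, 440000, 600000],
})

# Mean price per bedroom count: the quantity a default sns.barplot shows
mean_price = sample.groupby("bedrooms")["price"].mean()
print(mean_price)
```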

Next, let's see if we can find any difference between the prices of the houses with a waterfront and the houses without a waterfront.

sns.barplot(x='waterfront', y='price', data=house_data)

The output looks like this:

It is clearly visible from the output that houses with a waterfront (orange bar) are far more expensive than those without one (blue bar). This suggests that waterfront can be a useful feature for predicting house prices.

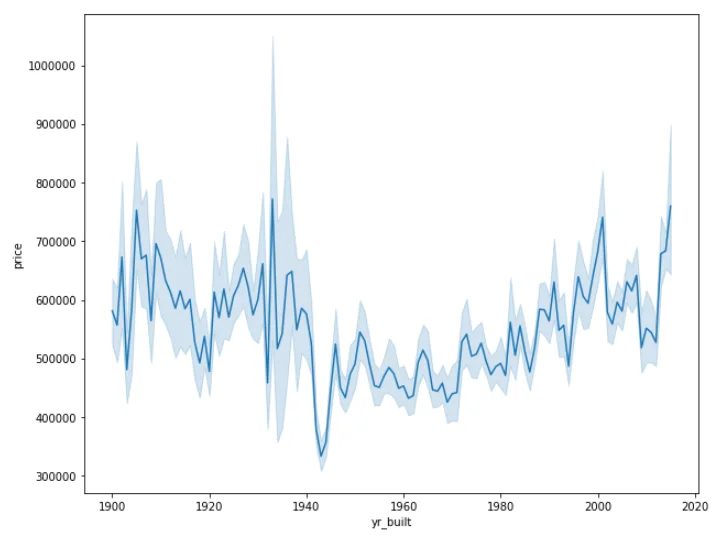

Let's now plot the relationship between the year a house was built and its price. Execute the following script:

sns.lineplot(x="yr_built", y="price", data=house_data)

The output looks like this:

The relationship between the year built and the price of the house is very interesting. Very old houses are expensive, possibly due to their historical value. Similarly, relatively new houses are expensive too, which is self-explanatory. However, houses that are neither very old nor new have lower prices, since they have neither historical value nor the appeal of being new.

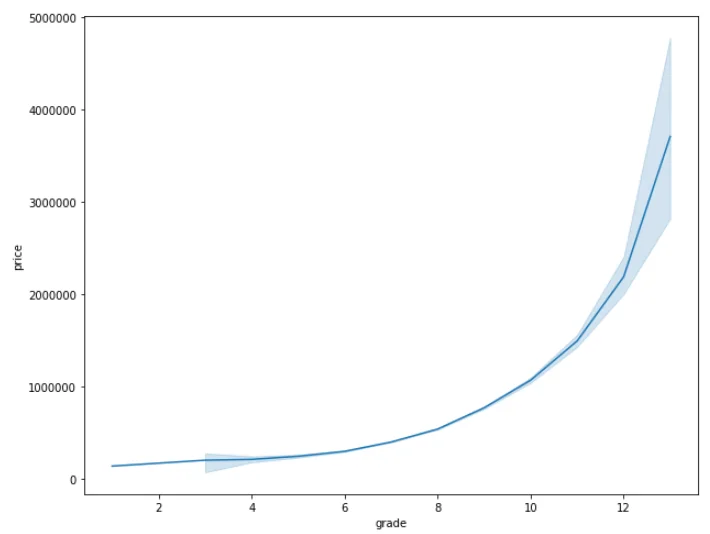

Next, let's find the relationship between grade and the price of the house. The grade is a value assigned by the King County Administration based on various factors. Let's draw a line plot of grade against house price.

sns.lineplot(x="grade", y="price", data=house_data)

The output looks like this:

The grade and the price of the house have a clear positive correlation as evident from the output.

That's enough data analysis. Let's now train regression algorithms on this dataset, but before that, we need to preprocess our data.

Data Preprocessing

In the preprocessing section, we will perform two tasks: dividing the data into feature and label sets, and then dividing the result into training and test sets.

The following script divides the data into features and label set:

dataset_features = house_data.drop(['price', 'id', 'date'], axis=1)

dataset_labels = house_data['price']

We drop the 'price', 'id' and 'date' columns from the feature set. The 'price' column is dropped since it contains the values we want to predict. The 'id' and 'date' columns are removed since they do not provide useful information about the price of the house.
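Calling `drop()` with `axis=1` removes columns rather than rows and returns a new DataFrame, leaving the original unchanged. The minimal sketch below applies the same split to a tiny hypothetical frame that reuses a few of the dataset's column names. All values are invented.

```python
import pandas as pd

# Tiny hypothetical frame reusing a few of the dataset's column names (values invented)
frame = pd.DataFrame({
    "id": [101, 102],
    "date": ["2014-05-02", "2014-06-15"],
    "price": [250000.0, 480000.0],
    "bedrooms": [3, 4],
    "sqft_living": [1200, 2400],
})

features = frame.drop(["price", "id", "date"], axis=1)  # axis=1: drop columns
labels = frame["price"]                                 # target Series

print(list(features.columns))  # ['bedrooms', 'sqft_living']
print(list(frame.columns))     # frame itself still has all five columns
```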

Next, the following script divides the data into training and test sets:

from sklearn.model_selection import train_test_split

train_features, test_features, train_labels, test_labels = train_test_split(dataset_features, dataset_labels, test_size=0.2, random_state=21)

Evaluating the Algorithms

We will train two regression models on this data: linear regression and random forest regression.
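Both models are scored on the same held-out test set created in the previous step. With `test_size=0.2`, `train_test_split` rounds the test size up. For the 21613 rows reported earlier, the test set therefore gets ceil(0.2 × 21613) = 4323 rows and the training set gets the remaining 17290. The quick check below uses a stand-in array of row indices with the same length as the dataset, not the real data:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Stand-in for the 21613 rows of the dataset: just the row indices
rows = np.arange(21613)

train_rows, test_rows = train_test_split(rows, test_size=0.2, random_state=21)

# A float test_size is rounded up: ceil(0.2 * 21613) = 4323
print(len(train_rows), len(test_rows))
```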

Linear Regression

Let's first train the linear regression model to see how well the trained model performs. Execute the following script:

from sklearn.linear_model import LinearRegression

regressor = LinearRegression()

regressor.fit(train_features, train_labels)

We trained our model on the training set using the “fit()” method of the LinearRegression class from the sklearn.linear_model module.
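A fitted `LinearRegression` stores one weight per feature in `coef_` and a bias term in `intercept_`. Its `predict()` returns the weighted sum of the features plus that bias. The minimal sketch below uses hypothetical, noise-free data generated from a known linear rule. It shows that the model recovers the rule and that `predict()` matches the manual computation.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical data from price = 200 * sqft + 10000 * bedrooms + 50000 (no noise)
X = np.array([[1000, 2], [1500, 3], [2000, 3], [2500, 4], [3000, 5]], dtype=float)
y = 200 * X[:, 0] + 10000 * X[:, 1] + 50000

model = LinearRegression().fit(X, y)

# predict() is the dot product of the features with coef_, plus intercept_
manual = X @ model.coef_ + model.intercept_
print(model.coef_, model.intercept_)
print(np.allclose(manual, model.predict(X)))
```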

Next, we need to make predictions on the test set. To do so, execute the following script:

predicted_price = regressor.predict(test_features)

Now that our model has made its predictions, the next step is to evaluate its performance. The metrics commonly used to evaluate regression models are mean absolute error (MAE), mean squared error (MSE) and root mean squared error (RMSE).
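Consider n test samples with true prices y_i and predictions ŷ_i. MAE is the mean of |y_i − ŷ_i| and MSE is the mean of (y_i − ŷ_i)². RMSE is the square root of MSE, which brings the error back into the units of the price. The small NumPy sketch below computes all three on hypothetical values and cross-checks them against `sklearn.metrics`:

```python
import numpy as np
from sklearn import metrics

# Hypothetical true and predicted prices (e.g. in hundreds of thousands of dollars)
y_true = np.array([3.0, 5.0, 2.0])
y_pred = np.array([2.0, 5.0, 4.0])

errors = y_true - y_pred           # [1, 0, -2]
mae = np.mean(np.abs(errors))      # (1 + 0 + 2) / 3 = 1.0
mse = np.mean(errors ** 2)         # (1 + 0 + 4) / 3 = 1.666...
rmse = np.sqrt(mse)                # about 1.291

# The manual formulas agree with scikit-learn's implementations
assert np.isclose(mae, metrics.mean_absolute_error(y_true, y_pred))
assert np.isclose(mse, metrics.mean_squared_error(y_true, y_pred))
print(mae, mse, rmse)
```

MSE and RMSE square the errors before averaging, so they penalize large mistakes more heavily than MAE. For the same reason, RMSE is always at least as large as MAE.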

The following script finds the value for these metrics for the linear regression algorithm:

from sklearn import metrics

print('Mean Absolute Error:', metrics.mean_absolute_error(test_labels, predicted_price))

print('Mean Squared Error:', metrics.mean_squared_error(test_labels, predicted_price))

print('Root Mean Squared Error:', np.sqrt(metrics.mean_squared_error(test_labels, predicted_price)))

The output looks like this:

Mean Absolute Error: 126522.39741578815

Mean Squared Error: 39089803087.07735

Root Mean Squared Error: 197711.41364897817

The lower the values of these metrics, the better the algorithm performs. Let's see how a random forest regressor performs on this data and compare the two algorithms.

Random Forest Regressor
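A random forest regressor trains many decision trees (`n_estimators` of them), each on a bootstrap sample of the training rows. Its prediction is the average of the individual trees' predictions. The sketch below checks that averaging on synthetic data. The data and the small number of trees are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic regression data (arbitrary, for illustration only)
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 3))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(0, 1, size=200)

forest = RandomForestRegressor(n_estimators=20, random_state=0).fit(X, y)

# Average the predictions of the individual fitted trees by hand
tree_mean = np.mean([tree.predict(X[:5]) for tree in forest.estimators_], axis=0)
print(np.allclose(tree_mean, forest.predict(X[:5])))
```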

Execute the following script to train the machine learning model using a random forest regressor.

from sklearn.ensemble import RandomForestRegressor

regressor = RandomForestRegressor(n_estimators=200, random_state=0)  # 200 trees; fixed seed for reproducibility

regressor.fit(train_features, train_labels)

The next step is to predict the output values:

predicted_price = regressor.predict(test_features)

And finally, the script below computes the performance metrics for the random forest algorithm:

from sklearn import metrics

print('Mean Absolute Error:', metrics.mean_absolute_error(test_labels, predicted_price))

print('Mean Squared Error:', metrics.mean_squared_error(test_labels, predicted_price))

print('Root Mean Squared Error:', np.sqrt(metrics.mean_squared_error(test_labels, predicted_price)))

The output of the script above looks like this:

Mean Absolute Error: 70467.01934555387

Mean Squared Error: 16251802750.754648

Root Mean Squared Error: 127482.55861393215

Conclusion

From the output, it is clear that the random forest algorithm is better at predicting house prices for the King County housing dataset. Its MAE, MSE and RMSE values are all far lower than those of the linear regression algorithm.
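To put the gap in numbers, the relative reduction in each metric can be computed directly from the test-set values reported earlier for the two models:

```python
# Test-set metrics reported earlier for the two models
linear = {"MAE": 126522.39741578815, "MSE": 39089803087.07735, "RMSE": 197711.41364897817}
forest = {"MAE": 70467.01934555387, "MSE": 16251802750.754648, "RMSE": 127482.55861393215}

# Percentage reduction achieved by the random forest relative to linear regression
reduction = {name: 100 * (1 - forest[name] / linear[name]) for name in linear}

for name, pct in reduction.items():
    print(f"{name}: {pct:.1f}% lower")
```

This works out to roughly a 44% lower MAE and a 36% lower RMSE for the random forest. The MSE reduction is larger because squaring amplifies the difference.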

Interested in knowing more about our AI & ML expertise?

Coditude has delivered a number of complex applications using Artificial Intelligence (AI) and Machine Learning (ML) algorithms to automate processes and simplify operations with higher precision. Coditude has comprehensive experience in providing services to AI & ML product companies and in developing intelligent applications using AI and ML solutions.